Nations must adopt Artificial Intelligence as a mechanism to build the transparency, integrity, and trustworthiness that are necessary to fight corruption. Without effective public scrutiny, the risk of money being lost to corruption and misappropriation is vast. Dr Chris Kpodar, a global Artificial Intelligence specialist, has advocated the use of artificial intelligence as an anti-corruption tool by redesigning systems that were previously prone to bribery and corruption.

Artificial Intelligence Tools

Artificial Intelligence has become popular because of its growing range of applications in many fields. Recently, IIT Madras opened a B.Tech course in Data Science in Tanzania, demonstrating the popularity of Artificial Intelligence. The history of Artificial Intelligence goes back to the 1950s, when computing power was limited and hardware was bulky. Since then, computing power has increased exponentially while hardware has been miniaturised, allowing algorithms to process much larger datasets. The field of AI, however, has gone through ups and downs in popularity.

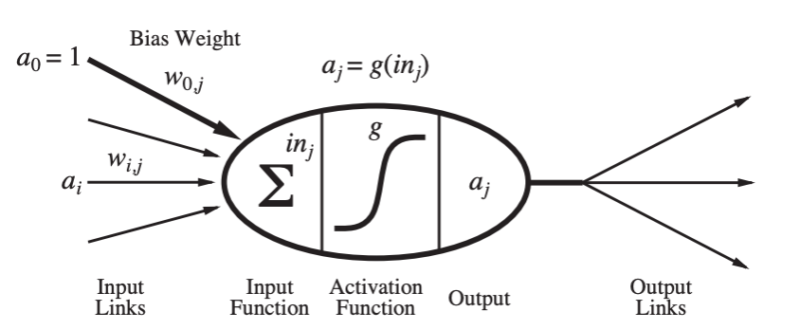

Researchers have worked on neural networks (see figure), a mathematical model inspired by the neurons in the brain and one of the foundations of state-of-the-art AI.

Artificial intelligence (AI), machine learning, deep learning, and data science are popular terms for computing fields concerned with teaching a machine how to learn. AI is a catch-all term that broadly means computing systems designed to understand and replicate human intelligence. Machine learning is a subfield of AI in which algorithms are trained on datasets to make predictions or decisions without being explicitly programmed. Deep learning is a subfield of machine learning that uses neural networks with multiple layers to learn from large datasets, loosely mimicking the way neurons in the brain process information. The field of AI resurged in popularity after a neural network architecture called AlexNet achieved impressive results in the ImageNet image recognition challenge in 2012. Since then, neural networks have entered industrial applications, and colossal research funding has been mobilised.

Breakthroughs that can aid Policy Implementation

There are many types of neural networks, each designed for a particular application. The recent popularity of applications like ChatGPT is due to a class of neural networks called language models. Language models are probability models that ask the question: given the previous tokens, what is the most likely next token to generate?
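To make the idea of next-token prediction concrete, here is a minimal sketch of a bigram model, which estimates the probability of the next token from the single token before it. It is only an illustration: models such as ChatGPT use neural networks conditioned on long contexts rather than counts, and the toy corpus and function names below are assumptions made for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(tokens):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probabilities(counts, prev):
    """Turn the raw counts for one context into a probability distribution."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    if total == 0:
        return {}  # context never seen during training
    return {tok: n / total for tok, n in following.items()}


def most_likely_next(counts, prev):
    """Return the highest-probability next token, or None for unseen contexts."""
    probs = next_token_probabilities(counts, prev)
    if not probs:
        return None
    return max(probs, key=probs.get)


# Hypothetical toy corpus, invented for illustration only.
corpus = (
    "the land record is valid . "
    "the land deed is forged . "
    "the land record is public ."
).split()

model = train_bigram_model(corpus)
print(next_token_probabilities(model, "land"))
print(most_likely_next(model, "land"))
```

In this toy corpus "land" is followed by "record" twice and "deed" once, so the model assigns "record" the higher probability.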

Two significant breakthroughs led towards ChatGPT. The first was a machine learning technique called the attention mechanism, originally used to translate text from one language to another. The second was the use of this technique in transformer-type language models, which achieved state-of-the-art performance on many tasks in artificial intelligence.
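The attention mechanism can be sketched in a few lines. The example below shows one common form, scaled dot-product attention, for a single query: each key is scored against the query, the scores are turned into weights with a softmax, and the output is the weighted average of the values. The vectors are made-up numbers and the function names are assumptions for illustration; real models learn these vectors and process many queries at once.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns the attention weights and the weighted average of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Made-up vectors: the query points in the same direction as the first key.
query = [2.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

weights, output = attention(query, keys, values)
print(weights)  # the first weight is the larger one
print(output)   # so the output is pulled towards the first value
```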

The Transformer, a powerful neural network architecture, was introduced in 2017 by Google researchers in “Attention Is All You Need”. It underlies the human-like text generated by ChatGPT. Large language models have since taken a big step forward in the technology landscape. Because machine learning applications are being deployed rapidly and AI research is advancing quickly, a governance model for these models is needed. In 2019, GPT-2, a machine learning model based on transformers, could not reliably handle basic numerical tasks such as listing the numbers from 0 to 100. Within a few years, further advances in the GPT models led to high scores on exams such as the SAT and GRE. Another breakthrough was the ability of machine learning programs to generate code, which has increased developer productivity.

Moreover, many researchers are working on AGI (Artificial General Intelligence), and nobody knows precisely when such capabilities might be developed. Researchers have not settled on a definition of AGI that everyone in the AI research community accepts. The rate of advancement and investment in AI research is staggering, which raises ethical concerns and calls for governance of these large language models. India is an emerging economy in which all sectors are growing rapidly. It is one of the fastest-growing major economies, with the services sector making up almost 50% of the entire economy. This gives the government high tax revenues from the sector, which also generates high-paying jobs. Most of the Indian workforce, however, is employed in the industrial and agricultural sectors.

Using AI to deal with Corruption and enhance Trust

The primary issue in India has been corruption at all levels of government, from the panchayat, district, and state levels to the central machinery. Corruption is attributed mainly to excessive regulation, rent-seeking behaviour, lack of accountability, and the need to obtain permits from the government. The Indian bureaucracy is among the least efficient in sectors such as infrastructure, real estate, metals and mining, aerospace and defence, and power and utilities, which are also the sectors most susceptible to corruption. This inefficiency keeps public-sector productivity low, which weighs on the Indian economy.

India ranked 85th out of 180 countries in the 2022 Corruption Perceptions Index, and close to 62% of Indians report encountering corruption, paying bribes to government officials to get a job done. There are many reasons for corruption in India: excessive regulation, a complicated tax system, bureaucratic hurdles, lack of ownership of work, and low productivity in the public sector. Corruption is dishonest or fraudulent conduct by those in power, typically involving bribery. Bribery is generally defined as the corrupt solicitation, acceptance, or transfer of value in exchange for official action. Bribery involves two actors in a transaction, the giver and the receiver, whereas corruption primarily involves one actor who abuses a position of power for personal gain. Bribery is a single act, while corruption may be an ongoing abuse of power to benefit oneself.

Trust is a critical glue in financial transactions. Where trust between individuals is higher, economic transactions are faster and the economy grows, as more businesses move in, bring capital, and increase the production and exchange of goods. When trust is low, businesses hesitate, and the economy either stagnates or declines. High-trust societies like Norway have advanced financial systems with well-developed credit and financial instruments, whereas in lower-trust societies such as Kenya and India many financial instruments and capital markets for raising finance are unavailable. Public policymakers must therefore seek ways to increase trust in their local economies by forming policies conducive to business transactions.

The real-estate sector in Tamil Nadu: a fit case for the use of AI

Tamil Nadu is India’s second-largest state economy and is the most industrialised and urbanised state in India. Real estate is an economic growth engine and a prime mover of monetary transactions. It is a prime financial asset for most Tamils across many social strata. However, real estate in Tamil Nadu is prone to corruption at many levels. One common method is the forgery of land registration documents, which has resulted in a lack of trust among investors at all levels in Tamil Nadu.

To address this lack of trust, we can use technology to empower the public and create an environment of accountability, resulting in greater confidence. Machine learning can provide algorithms to detect these forgeries and prevent land grabbing. Tools such as identity analysis, document analysis, and transaction pattern analysis can help to provide more accountability. Beyond these, machine learning offers many methods, or combinations of methods, that can be used. One advanced approach uses transformer-based models, which are the foundation of language models such as BERT and the Generative Pre-trained Transformer (GPT) family used in text-based applications. Such models could be fine-tuned on original documents to serve as a baseline against which new documents are regularly checked for forgeries. Documents can also be encoded as vectors so that semantic anomalies between documents can be detected.
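The idea of encoding documents and comparing them can be sketched as follows. This is a deliberately simplified stand-in: it encodes each document as a bag of word counts and compares documents with cosine similarity, whereas a production system would more plausibly use embeddings from a model such as BERT. The sample records, the function names, and the 0.9 threshold are all hypothetical values chosen for illustration.

```python
import math
import re
from collections import Counter


def encode(text):
    """Encode a document as word counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two count vectors, from 0.0 to 1.0."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_if_anomalous(registered_text, submitted_text, threshold=0.9):
    """Flag a submitted document that drifts too far from the registered copy.

    The threshold is an assumed value; in practice it would need tuning
    on real, labelled documents.
    """
    score = cosine_similarity(encode(registered_text), encode(submitted_text))
    return score < threshold, score


# Hypothetical records, invented for illustration only.
registered = "survey number 123 owner a kumar area 2400 sq ft registered 2015"
submitted = "survey number 123 owner r selvam area 2400 sq ft registered 2015"

flagged, score = flag_if_anomalous(registered, submitted)
print(flagged, round(score, 3))
```

A flag raised this way is only a signal for human review, not proof of forgery, since word counts cannot tell a legitimate transfer of ownership from a fraudulent one.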

Once a forgery is detected, the case can be automatically referred to civil magistrates or other pertinent authorities. Additionally, software repository hosting sites make it possible for the public to be notified of any change in status or activity. Customised public repositories modelled on GitHub might create immense value for Tamil Nadu’s Department of Revenue by creating accountability, increasing productivity, and reducing workload. Such repositories, displaying land transaction activity, might alert the public to forgeries and so create an environment of greater accountability and trust. Another approach is to use computer vision algorithms, such as convolutional neural networks combined with BERT, to validate signatures and to detect document tampering and inconsistent time frames. This can be done by training the algorithms on original documents and then checking documents that are suspected of being forged.
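One way a public repository could surface changes to a land record is by showing a line-by-line difference between the previous and current versions, much as code hosting sites do for source files. The sketch below uses Python's standard `difflib` module for this. The record layout and the function name are hypothetical; no actual Department of Revenue format is implied.

```python
import difflib


def describe_changes(old_record, new_record):
    """Return the lines removed ('-') and added ('+') between two versions."""
    diff = list(
        difflib.unified_diff(
            old_record.splitlines(),
            new_record.splitlines(),
            fromfile="previous",
            tofile="current",
            lineterm="",
        )
    )
    # The first two lines are the '--- previous' / '+++ current' file headers.
    body = diff[2:]
    return [line for line in body if line.startswith(("+", "-"))]


# Hypothetical record versions, invented for illustration only.
previous = "survey: 123\nowner: A. Kumar\narea: 2400 sq ft"
current = "survey: 123\nowner: R. Selvam\narea: 2400 sq ft"

for change in describe_changes(previous, current):
    print(change)
```

Publishing such a change list alongside each registration would let an owner or neighbour notice an unexpected change of ownership without having to compare full documents.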

Another primary concern in Tamil Nadu’s government is that people in positions of power, or close to financial oversight, are more prone to corruption. This risk can be monitored using graph neural networks, which can map individuals, their connections, and their economic transactions as a network and flag the individuals who are more likely to be involved in corruption. Another way to reduce corruption is to remove personal discretion from the process: machine learning can automate the tasks and documents involved in land registration, and digitisation itself might help. Large language models can also be used as classifiers and released to the public to maintain accountability over the Tamil Nadu government’s spending, so that the public is aware of it and the diversion of government money for personal gain is further reduced.

Another central area of corruption is the tender, the bidding process for government contracts in Tamil Nadu, such as public development works or engineering projects. The tender process can be made more public, and machine learning algorithms can be used to check whether norms, contracts, and procedures are followed when tenders for government projects are awarded. To avoid wasteful expenditure, algorithms can check whether objective conditions are met, with any deviations flagged and placed in the public domain. If there is any suspicion, the public can file a PIL (public interest litigation) in Tamil Nadu’s court system.
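Checking whether objective conditions are met when a tender is awarded can start with plain rules before any machine learning is involved. The sketch below flags three kinds of deviation. The rules themselves (a minimum of three bids, award to the lowest eligible bidder), the field names, and the sample bids are assumptions for illustration and are not taken from Tamil Nadu's actual tender regulations.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    bidder: str
    amount: float
    submitted_on_time: bool
    documents_complete: bool


def check_award(bids, winner_name, min_bids=3):
    """Return a list of human-readable deviations; empty means none found."""
    flags = []
    if len(bids) < min_bids:
        flags.append(f"fewer than {min_bids} bids received")

    winner = next((b for b in bids if b.bidder == winner_name), None)
    if winner is None:
        flags.append("winner did not submit a bid")
        return flags

    eligible = [b for b in bids if b.submitted_on_time and b.documents_complete]
    if winner not in eligible:
        flags.append("winner was not eligible")

    if eligible:
        lowest = min(eligible, key=lambda b: b.amount)
        if winner.amount > lowest.amount:
            flags.append(
                f"winner is not the lowest eligible bid ({lowest.bidder} bid lower)"
            )
    return flags


# Hypothetical bids, invented for illustration only.
bids = [
    Bid("Firm A", 100.0, True, True),
    Bid("Firm B", 90.0, True, True),
    Bid("Firm C", 120.0, True, True),
]

print(check_award(bids, "Firm B"))  # no deviations
print(check_award(bids, "Firm A"))  # flagged: a lower eligible bid exists
```

Because the output is a list of plain-language flags, it could be published as-is for public scrutiny.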

We conclude that, as more machine learning tools are deployed within Tamil Nadu’s state machinery, corruption can be reduced significantly by releasing information to the public and creating an environment of greater accountability.

References:

- Russell, S. J., & Norvig, P. (2021). Artificial Intelligence: A Modern Approach (4th ed.). Pearson.

- Bau, D., Elhussein, M., Ford, J. B., Nwanganga, H., & Sühr, T. (n.d.). Governance of AI models. Managing AI risks. https://managing-ai-risks.com/

- U.S. Department of State. (2021). 2021 Country Reports on Human Rights Practices: India. https://www.state.gov/reports/2021-country-reports-on-human-rights-practices/india/

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of NAACL-HLT (pp. 4171-4186). https://arxiv.org/abs/1810.04805

- Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI blog, 1(8). https://openai.com/blog/better-language-models/

- Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). Improving language understanding by generative pre-training. OpenAI blog, 12. https://openai.com/blog/language-unsupervised/

- Bai, Y., Kadavath, S., Kundu, S., Askell, A., Kernion, J., Jones, A., … Kaplan, J. (2022). Constitutional AI: Harmlessness from AI feedback. arXiv preprint arXiv:2212.08073. https://arxiv.org/pdf/2212.08073.pdf; https://www.anthropic.com/news/constitutional-ai-harmlessness-from-ai-feedback

- Ouyang, L., Wu, J., Jiang, X., Almeida, D., Wainwright, C. L., Mishkin, P., Zhang, C., Agarwal, S., Slama, K., Ray, A., Schulman, J., Hilton, J., Kelton, F., Miller, L., Simens, M., Askell, A., Welinder, P., Christiano, P., Leike, J., & Lowe, R. (2022). Training language models to follow instructions with human feedback. arXiv preprint arXiv:2203.02155. https://arxiv.org/abs/2203.02155

Feature Image: modernghana.com